
A Unified Path to Responsible AI Transformation

As you’re reading this, ask yourself the following: Do you know how to integrate AI into your daily workflow practically and optimally, without compromising the organisation’s data security? Do you know what AI Compliance Usage entails? How certain are you that your employees and co-workers are any wiser?

The most important question leaders should be asking today is not whether their organisation should adopt artificial intelligence. The question that matters far more to success is this:

Is the organisation equipping people effectively to adopt and use generative AI (GenAI) solutions, maximise opportunities, and negate critical threats?

Understanding Responsible AI

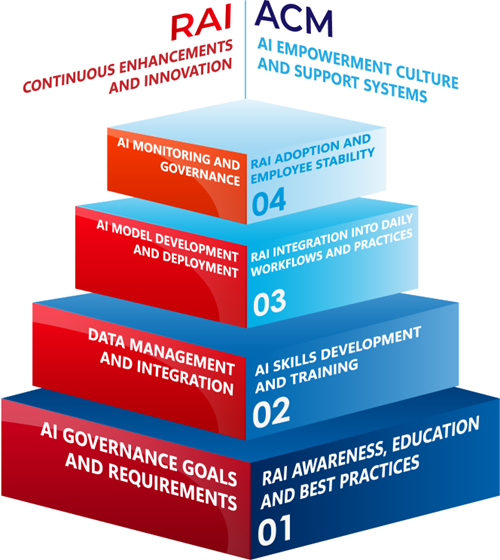

Responsible AI refers to the development and deployment of AI systems that are fair, transparent, accountable, and respectful of user privacy. It’s essential for organisations to adopt Responsible AI to mitigate risks such as bias, discrimination, and privacy violations. By doing so, they can build trust and ensure the ethical use of AI technologies.

Integrating Adoption and Change Management with AI adoption initiatives ensures that the technological advancements brought by AI are effectively harnessed and aligned with organisational goals. This holistic approach not only enhances the success rate of AI projects but also ensures that the workforce is engaged, trained, and ready to embrace the future of work.

The Role of Employees in Responsible AI

Employees are the backbone of any organisation, and their involvement is instrumental in fostering Responsible AI from the get-go. For this to be a success, they need:

- Awareness and Education: Employees need to be educated about AI technologies and their ethical implications. This includes understanding how AI works, its potential benefits, and the risks associated with its misuse.

- Active Participation: Employees can contribute to AI projects by providing valuable insights, annotating data, and offering feedback on AI systems. Their diverse perspectives can help identify potential biases and improve the overall quality of AI solutions.

- Ethical Decision-Making: Empowering employees to make ethical decisions and voice concerns about AI applications early on is crucial. This can be achieved by creating a culture of openness and providing clear guidelines on ethical AI practices.

Empowering Employees:

Where do you start when it comes to empowering your employees effectively? In a world awake to AI’s impact, skill building is no longer simply a perk for employees; it’s a priority for organisational success. Start by thinking about how leadership can support:

- Training Programs: Develop comprehensive training programs that cover the basics of AI, its ethical implications, and practical applications. These programs should be accessible to all employees, regardless of their technical background.

- Inclusive Culture: Foster an inclusive culture where diverse perspectives are valued. Encourage collaboration between different departments and create forums for open discussions about AI ethics.

- Tools and Resources: Provide employees with the necessary tools and resources to engage with AI responsibly. This includes access to AI ethics guidelines, reporting mechanisms for ethical concerns, and platforms for continuous learning.

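- Feedback Integration: Authentically integrate employee feedback into business goals, so that the concerns and ideas employees raise about AI visibly shape how the organisation uses it.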

How is this currently being tackled by other organisations?

Several organisations have successfully integrated Responsible AI with the help of their employees:

- Example 1: Microsoft’s AI Ethics Training: The leading tech company implemented a comprehensive AI ethics training program for its employees. This program included workshops, online courses, and interactive sessions with AI experts. As a result, employees became more aware of AI’s ethical implications and actively contributed to developing fair and transparent AI solutions.

- Example 2: A financial services firm created an AI ethics committee comprising employees from various departments. This committee regularly reviews AI projects, identifies potential ethical issues, and provides recommendations to ensure responsible AI practices.

The proof is out there:

LinkedIn’s Learning & Development (L&D) Report 2024 highlights the critical role of human-centric strategies in the AI era. Key findings include:

- Career Development as a Priority: Career development has surged as a top priority for L&D, moving from ninth to fourth place in just one year. This shift underscores the importance of aligning learning programs with business goals to drive organisational success.

- AI Skills and Career Growth: The report emphasises that AI skills and career development are essential for both employee and company growth. Employees are motivated by career progress, and organisations that invest in AI skills development see higher retention rates and more internal mobility.

- Learning Culture: Companies with strong learning cultures experience better retention, internal mobility, and a healthier management pipeline. This highlights the importance of fostering a culture where continuous learning and development are prioritised.

As AI continues to evolve, User Enablement sits at the centre of organisational agility, delivering business innovation and critical skills. Employees, in turn, are key enablers of Responsible AI in the modern workplace. By educating and empowering them, organisations can ensure the ethical use of AI technologies and build a culture of trust and transparency.

Remember, change is a journey. Let’s take it together.

For more information, please contact our team.